AI in QA Testing: From Bug Hunter to Pattern Spotter

AI in QA testing is no longer just a futuristic idea. It's a lifeline for testers, QA engineers, and tech leads drowning in late-night test failures, endless bug reports, and release deadlines that don't budge. I know the feeling of staring at a broken script while the clock keeps ticking, wondering how to pull it all together before morning. QA often feels like a battlefield, full of vague requirements, brittle automation, and budgets that barely cover the essentials.

But here's the shift: AI isn't hype. It's a tool that turns that chaos into clarity, making testing smarter, faster, and far less painful.

How to use AI in QA Testing?

AI in QA testing automates test case generation, strengthens test automation, and predicts defects using tools such as Testim, Mabl, and Applitools. Integrate AI into CI/CD pipelines to prioritize high-risk areas and reduce manual effort. Use NLP to align tests with requirements, and use anomaly detection to flag unusual test results. Start with a single tool, monitor performance, and scale gradually.

AI in QA testing refers to the use of artificial intelligence techniques such as machine learning, natural language processing, and predictive analytics to enhance software quality assurance. It helps QA teams automate repetitive tests, generate smarter test cases, predict defects earlier, and improve overall test coverage, enabling faster and more reliable software delivery.

What is AI in QA Testing?

Artificial Intelligence (AI) in Quality Assurance (QA) testing refers to the use of intelligent algorithms, machine learning, and natural language processing to improve the way software testing is done. Unlike traditional automation, which only follows pre-written scripts, AI enables testing tools to learn from data, predict errors, and adapt to changes in the application.

This makes testing faster, smarter, and more reliable for modern software systems.

AI in Software Quality Assurance

Enterprises are rapidly adopting Artificial Intelligence (AI) in Quality Assurance (QA) to meet the growing demands for faster, more reliable, and cost-effective software delivery.

AI-driven QA empowers companies to transform their testing processes and stay competitive in the digital economy.

- AI-powered testing tools automate repetitive QA tasks, freeing teams to focus on complex scenarios and reducing overall testing time. This leads to faster product releases and shorter development cycles, which are crucial for business growth.

- Using AI algorithms increases the accuracy of test results by minimizing human errors and ensuring consistent execution. Enhanced test reliability helps organizations catch hidden bugs before they become costly production issues.

- With AI, enterprises can test software at scale, analyzing large datasets, simulating diverse user behaviors, and covering edge cases that are often missed in manual testing. This scalability ensures broad test coverage, even for complex or rapidly evolving applications.

- AI-driven automation can significantly lower QA costs. By cutting down manual labor and identifying defects early, businesses save on remediation expenses and reduce the need for costly rework late in the development process.

- Predictive analytics with AI enables early risk identification. It can analyze historical test data, user patterns, and system logs to forecast potential failures, allowing proactive mitigation instead of reactive problem-solving.

- AI tools enhance collaboration between QA, development, and operations teams. Natural language interfaces and analytics dashboards make quality metrics accessible to all stakeholders, aligning efforts and streamlining DevOps processes.

- Embedding AI in QA supports DevOps goals like continuous integration and deployment (CI/CD). Automated, intelligent testing keeps up with rapid code changes, improving release reliability and reducing bottlenecks in the pipeline.

AI QA Automation Tools to Know in 2026

AI-powered QA tools are transforming software testing in 2026 by bringing intelligence, automation, and actionable analytics to every stage of the quality assurance process. These platforms enable QA teams to work faster and smarter, reducing manual effort and making testing more robust, even as applications rapidly evolve.

Below you’ll find a user-focused, purpose-driven breakdown of standout AI QA tools, each explained for practical comparison and ease of adoption.

Test Automation with AI

- Testim

  - Lets you create, execute, and maintain test automation using advanced AI algorithms.

  - Its self-healing features automatically update tests when the UI changes, so manual intervention is minimal.

  - Includes smart locators that adapt to element changes and seamless CI/CD integrations for automated pipelines.

  - Especially effective for large test suites, with analytics and reporting dashboards that give insights beyond simple pass/fail.

- Mabl

  - Focuses on unified web and API testing with AI-driven change detection.

  - Alerts teams to visual regressions and the impact of changes in real time.

  - Tests auto-heal across software releases, reducing the need for constant script updates and lowering test flakiness.

- Functionize

  - Delivers intelligent test creation and maintenance via machine learning.

  - Generates robust test scripts using plain English and automatically adapts to UI changes.

  - Offers cloud-based scalability, empowering teams to manage vast test scenarios quickly.

Visual Testing

- Applitools

  - Uses Visual AI to spot discrepancies and changes in the application’s UI that might be missed by traditional, code-based tests.

  - Can validate entire visual layouts, ensuring design consistency across browsers and devices.

  - Integrates easily with leading test automation frameworks and CI pipelines for effortless adoption.

- AskUI

  - Specializes in visual UI testing, even on complex or legacy applications where DOM-based selectors aren’t reliable.

  - Implements natural language test instructions and automates everything users can see on-screen, not just web elements.

  - Great for cross-platform visual validation and scenarios where visual regression matters most.

NLP-Based Test Authoring

- Test.ai

  - Designed for mobile QA, it generates and runs tests by interpreting app features and user flows.

  - Utilizes AI bots to simulate real user behavior, enabling thorough usability and functional coverage.

  - Particularly efficient at catching mobile-specific issues quickly, even as the app evolves.

- Testsigma

  - Empowers you to write automation scripts in simple English, with no coding needed.

  - Ideal for teams who need fast test case creation and easy adaptation to UI changes.

  - Dynamic locators keep tests robust and resilient as the UI updates, making it a strong fit for fast-moving dev teams.

Predictive Analytics Platforms

- ReportPortal

  - Centralizes test results and provides AI-powered analytics that highlight risks, unstable tests, and recurring failures.

  - Accelerates decision-making by presenting actionable insights instead of just raw test logs.

  - Supports major automation frameworks and integrates with popular CI/CD tools.

- Launchable

  - Uses historical test results, code updates, and machine learning to predict which tests are most likely to catch new defects.

  - Allows teams to run only the most relevant test cases, reducing test cycle time without increasing risk.

  - Improves resource allocation and reduces unnecessary work, especially valuable for larger QA organizations.

Why do QA Teams use Predictive Metrics?

QA teams use predictive metrics to shift from reactive bug-fixing to proactive risk management. Historical data lets them forecast likely defects, prioritize high-risk areas, and make the most of limited resources. The payoff is issues caught earlier, lower costs, and faster releases, which together mean better-quality software and a shorter time-to-market.

Key reasons QA teams use predictive metrics:

- Early Defect Detection: Identifies components prone to bugs before they fail in production, enabling preventative action and avoiding expensive post-release fixes.

- Smarter Resource Allocation: Focuses testing efforts and budget on the riskiest parts of the application, maximizing efficiency and test coverage without exhaustive testing.

- Faster Time-to-Market: By pinpointing issues early and streamlining testing, teams can release features and updates more quickly and confidently.

- Improved Software Quality: Catches defects earlier in the development cycle, leading to more stable, reliable, and higher-quality products.

- Cost Savings: Prevents costly rework, emergency patches, and customer-facing failures, reducing overall development and maintenance costs.

- Data-Driven Decisions: Provides insights for confident release decisions, helping teams understand risk levels and know when a product is ready for deployment.

- Proactive Risk Management: Moves QA from a reactive gatekeeper role to a strategic advisor, aligning testing with business goals and user needs.

How it works:

Predictive QA leverages machine learning and AI to analyze data from code commits, test runs, past defects, and user feedback to find patterns that signal future problems, creating a more intelligent and efficient testing process.
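To make the idea concrete, here is a minimal sketch of risk scoring per component. It is a hand-weighted heuristic standing in for a trained model, not how any particular tool works. The `ComponentHistory` fields, the weights, the caps of 20 commits and 10 defects, and the sample numbers are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ComponentHistory:
    name: str
    recent_commits: int    # code churn in the lookback window
    past_defects: int      # defects previously traced to this component
    failed_test_runs: int  # failing runs that exercised this component
    total_test_runs: int


def risk_score(c: ComponentHistory) -> float:
    """Combine churn, defect history, and failure rate into a 0..1 score.

    The weights and caps are illustrative assumptions, not tuned values.
    """
    churn = min(c.recent_commits / 20, 1.0)
    defects = min(c.past_defects / 10, 1.0)
    failure_rate = (
        c.failed_test_runs / c.total_test_runs if c.total_test_runs else 0.0
    )
    return round(0.4 * churn + 0.4 * defects + 0.2 * failure_rate, 3)


def rank_by_risk(components):
    """Order components from highest to lowest risk score."""
    return sorted(components, key=risk_score, reverse=True)


# Hypothetical history for three components.
history = [
    ComponentHistory("checkout", 18, 7, 12, 100),
    ComponentHistory("search", 4, 1, 2, 100),
    ComponentHistory("profile", 10, 3, 5, 100),
]

for component in rank_by_risk(history):
    print(f"{component.name}: {risk_score(component)}")
```

In practice a team would likely replace the fixed weights with a model trained on its own defect data, but the inputs and the ranked output would look much the same.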

AI Contextual Organizational Knowledge Validation in QA

AI contextual organizational knowledge validation in Quality Assurance (QA) is the process of ensuring that AI systems' outputs or proposed initiatives align with a company's specific business logic, context, and operational environment, often using a "human-in-the-loop" (HITL) approach.

This type of validation is crucial because AI models, which primarily identify patterns and correlations in data, lack inherent human judgment and contextual understanding. Human experts must bridge the gap between data-driven efficiency and real-world relevance.

Key Aspects and Methods

- Human-in-the-Loop (HITL) Validation: This hybrid approach is a cornerstone of contextual validation. AI handles data processing and pattern recognition, while human experts (QA professionals, subject matter experts) review and validate the outputs based on their situational awareness and domain knowledge. This feedback loop helps the AI system learn what constitutes a genuine issue versus expected behavior within a specific organizational context.

- Business Problem and Context Validation: Before any AI project is scaled, organizations must systematically evaluate if the AI solution addresses a genuine business problem and fits within organizational constraints and goals. This involves confirming the AI is appropriate for the problem (rather than a simpler alternative) and that the necessary data and infrastructure are available.

- Validation of AI-Generated Content/Decisions: In QA workflows, AI is used for tasks like generating test cases, defect prediction, and code review. Validation ensures these AI outputs meet organizational standards for:

  - Accuracy and Relevance: Verifying that AI-generated information is factually correct and aligns with the specific user query or intended message.

  - Bias and Ethics: Assessing content for potential biases to ensure fairness and adherence to ethical standards, which often requires human judgment to interpret nuances.

  - Compliance: Ensuring AI outputs and the systems themselves comply with industry-specific regulations (e.g., FDA guidelines in life sciences).

- Continuous Learning and Refinement: Validation is an ongoing process, not a one-time event. Feedback from human validation is used to continuously refine the AI models, reducing false positives/negatives and improving their contextual relevance over time.

- Integration with Existing Workflows: For successful adoption, AI validation must integrate seamlessly with existing tools and workflows (e.g., Jira, test automation frameworks). This ensures that validation results can trigger necessary actions and align with current team processes.
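The human-in-the-loop feedback cycle described in this list can be sketched in a few lines. This is an illustrative outline only: the `triage` and `record_verdict` helpers, the `0.9` threshold, and the shape of each finding are assumptions, not part of any specific tool.

```python
def triage(findings, auto_threshold=0.9):
    """Split AI findings into auto-accepted ones and ones needing human review."""
    accepted, needs_review = [], []
    for finding in findings:
        if finding["confidence"] >= auto_threshold:
            accepted.append(finding)
        else:
            needs_review.append(finding)
    return accepted, needs_review


def record_verdict(labels, finding, is_real_issue):
    """Store the reviewer's decision so it can feed later model refinement."""
    labels.append({"id": finding["id"], "is_real_issue": is_real_issue})


# Hypothetical findings produced by an AI analysis step.
findings = [
    {"id": "F-1", "summary": "Checkout total off by one cent", "confidence": 0.97},
    {"id": "F-2", "summary": "Banner hidden for trial accounts", "confidence": 0.55},
]
accepted, needs_review = triage(findings)

labels = []
# A domain expert knows trial accounts are not meant to see the banner,
# so the low-confidence finding is marked as expected behavior.
record_verdict(labels, needs_review[0], is_real_issue=False)

print([f["id"] for f in accepted])
print(labels)
```

The stored labels are the part that matters for continuous refinement: they capture organizational context that the model could not infer from the data alone.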

Ultimately, AI contextual organizational knowledge validation transforms QA from a reactive process to a proactive, intelligent system that blends machine efficiency with invaluable human judgment to ensure high-quality, relevant outcomes.

Key Benefits of Using AI in QA Testing

AI is changing the way enterprises manage quality assurance (QA). Traditional testing often struggles with speed, coverage, and reliability. By integrating AI into QA pipelines, organizations achieve higher efficiency, better accuracy, and faster release cycles.

Below are the core benefits that AI brings to modern QA practices:

Improved Test Coverage

- AI tools analyze huge volumes of production and user data to identify critical usage patterns.

- This helps teams create test cases that reflect real-world scenarios with far more accuracy.

- As a result, enterprises ensure that edge cases and less obvious workflows also get tested.

- Broader coverage directly reduces the chances of defects slipping into production.

Smarter Test Case Generation

- Generating test cases manually is time-consuming and often incomplete.

- AI automates this by scanning requirements, code changes, and past defect data.

- Machine learning models then suggest and prioritize meaningful test cases.

- This reduces human error and keeps the test library updated with evolving business needs.

Flaky Test Detection and Reduction

- Flaky tests create noise by failing inconsistently even when the code is correct.

- AI models study execution history and system behavior to quickly flag unstable tests.

- Once identified, teams can debug the root issue or replace unreliable scripts.

- This brings stability to CI/CD pipelines and builds trust in automated testing.
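One simple signal for flakiness is a test that both passes and fails on the same commit, since the code did not change between runs. The sketch below applies that rule to execution history. It is a rule-based stand-in for the statistical models that AI tools use, and the `(test_name, commit_sha, passed)` record format is an assumption.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return names of tests that both passed and failed on the same commit.

    `runs` is an iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    flaky = {
        test for (test, _sha), seen in outcomes.items() if seen == {True, False}
    }
    return sorted(flaky)


# Hypothetical execution history.
runs = [
    ("test_login", "a1b2c3", True),
    ("test_login", "a1b2c3", False),   # same commit, different outcome
    ("test_cart", "a1b2c3", False),
    ("test_cart", "a1b2c3", False),    # consistently failing: likely a real bug
    ("test_search", "a1b2c3", True),
    ("test_search", "d4e5f6", False),  # different commit: not evidence of flakiness
]

print(find_flaky_tests(runs))  # ['test_login']
```

Note that `test_cart` is not flagged: it fails every time, which points to a genuine defect rather than an unstable test.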

How to use AI in Quality Assurance?

Artificial intelligence is transforming how quality assurance (QA) teams test, analyze, and release high-quality software. AI brings speed, accuracy, learning, and adaptability to every stage in the QA workflow.

Here’s a step-by-step look at how AI fits into modern QA processes:

- AI analyzes requirement documents and user stories automatically.

- It extracts critical test conditions and features, saving hours of manual analysis.

- The tool flags inconsistencies or unclear requirements early to reduce downstream errors.

- AI-powered platforms optimize test case selection.

- They help prioritize high-risk and frequently used modules that need more test coverage.

- Test coverage gaps are identified using pattern recognition and historical defect trends.

- During test planning, AI models suggest resource allocation.

- These models predict the optimal mix of manual and automated testing.

- AI factors in release timelines, test complexity, and past project data to make recommendations.

Intelligent Test Design

- AI-driven tools auto-generate test cases from requirements and code.

- They use natural language processing to translate requirements into executable tests.

- The system updates test cases if requirements or code change, reducing manual rework.

- Test data is created intelligently using AI.

- Synthetic data generation allows for more realistic, privacy-safe test scenarios.

- The data is varied to ensure broader test coverage, reducing blind spots.
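As a rough illustration of synthetic test data, the sketch below builds fake user records that mix random values with deliberate boundary cases. It is rule-based rather than model-generated, and the field names, edge-case values, and `synthetic_users` helper are assumptions made for this example.

```python
import random
import string


def synthetic_users(count, seed=0):
    """Generate fake user records, mixing typical values with boundary cases."""
    rng = random.Random(seed)  # seeded so a failing test can be reproduced
    edge_names = ["", "O'Brien", "Zoë", "a" * 255]
    edge_ages = [0, 17, 18, 120]
    users = []
    for i in range(count):
        if i < len(edge_names):
            # The first few records cover known boundary conditions.
            name, age = edge_names[i], edge_ages[i]
        else:
            length = rng.randint(3, 12)
            name = "".join(rng.choices(string.ascii_lowercase, k=length))
            age = rng.randint(18, 90)
        users.append(
            {"id": i, "name": name, "age": age, "email": f"user{i}@example.test"}
        )
    return users


for user in synthetic_users(6):
    print(user["id"], repr(user["name"][:12]), user["age"])
```

Because no record is copied from production, the data carries no real personal information, and the fixed seed keeps every run identical.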

Smart Test Execution and Monitoring

- AI-powered automation frameworks select the right set of tests to run.

- Selection is based on code changes, risk analysis, and historical failures.

- This focused testing reduces execution time but keeps quality high.

- Real-time monitoring uses AI to detect anomalies.

- It spots unexpected execution patterns, flakiness in tests, or environment issues instantly.

- The system alerts QA teams before these issues impact releases.
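The test selection idea from this section can be sketched as a filter plus a sort: keep only tests that touch changed files, then run the historically most failure-prone ones first. The `coverage_map`, `failure_counts`, and `budget` inputs are hypothetical, and real tools typically learn this ranking rather than using raw counts.

```python
def select_tests(changed_files, coverage_map, failure_counts, budget):
    """Pick up to `budget` tests that touch changed files, most failure-prone first."""
    changed = set(changed_files)
    candidates = [
        test for test, files in coverage_map.items() if changed & set(files)
    ]
    # Sort by descending failure count, then by name for a stable order.
    candidates.sort(key=lambda test: (-failure_counts.get(test, 0), test))
    return candidates[:budget]


# Hypothetical mapping of tests to the source files they exercise.
coverage_map = {
    "test_checkout_total": ["cart.py", "pricing.py"],
    "test_apply_coupon": ["pricing.py"],
    "test_login": ["auth.py"],
    "test_cart_add": ["cart.py"],
}
# Hypothetical count of past failures per test.
failure_counts = {"test_apply_coupon": 5, "test_checkout_total": 2}

print(select_tests(["pricing.py"], coverage_map, failure_counts, budget=2))
# ['test_apply_coupon', 'test_checkout_total']
```

A change to `pricing.py` skips `test_login` and `test_cart_add` entirely, which is where the execution-time savings come from.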

Adaptive Test Maintenance

- AI tracks code changes and updates tests accordingly.

- Broken scripts caused by code refactoring are automatically adapted by AI systems.

- Test case relevancy is checked, and obsolete cases are flagged for deletion.

- Maintenance workloads are predicted and prioritized by AI analytics.

- Teams can focus on the most critical or frequently impacted test cases.
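A simplified view of how a broken locator might be adapted is shown below. The page is modeled as a list of plain dictionaries so the sketch runs without a browser. The `find_element` helper, the attribute names, and the "at least two matching attributes" rule are assumptions for illustration, not the algorithm of any specific product.

```python
def find_element(page, locator):
    """Find an element by id first, then fall back to the closest attribute match."""
    for element in page:
        if element.get("id") == locator["id"]:
            return element, False  # exact match, no healing needed

    def similarity(element):
        # Count how many of the remembered attributes still match.
        return sum(
            1
            for key in ("tag", "text", "css_class")
            if element.get(key) == locator.get(key)
        )

    best = max(page, key=similarity, default=None)
    if best is not None and similarity(best) >= 2:
        return best, True  # healed: the new locator should be logged and reviewed
    return None, False


# Locator recorded when the test was written.
locator = {
    "id": "submit-btn",
    "tag": "button",
    "text": "Place order",
    "css_class": "primary",
}

# The page after a refactor renamed the button's id.
page = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "css_class": "secondary"},
    {"id": "order-submit", "tag": "button", "text": "Place order", "css_class": "primary"},
]

element, healed = find_element(page, locator)
print(element["id"], healed)  # order-submit True
```

Returning the `healed` flag alongside the element keeps a human in the loop: the test can continue, while the changed locator is surfaced for review instead of being silently accepted.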

By integrating AI into each QA phase, organizations gain faster feedback, higher test accuracy, and the agility required for frequent releases. AI doesn't replace testers. It frees them to focus on strategic, value-adding work while routine tasks are automated.

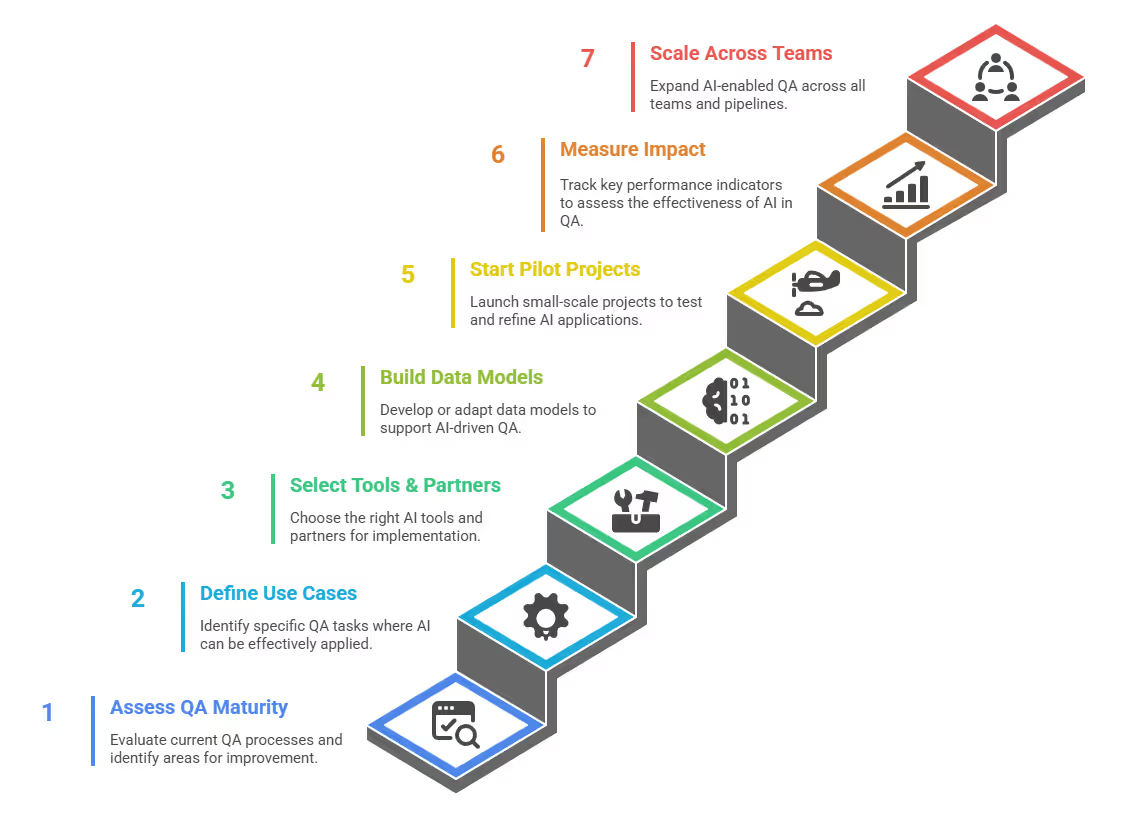

How to Implement AI in Your QA Strategy

AI is changing how software testing and quality assurance (QA) are done. Instead of relying only on manual and rule-based processes, QA teams can now use AI to test smarter, faster, and more accurately. But adopting AI is not about buying a tool and switching it on. It needs a clear strategy.

The following steps help tech leaders roll out AI in a structured and scalable way.

1. Assess Current QA Maturity

Before bringing AI into the QA process, understand where you stand today.

- Review your existing QA workflows: how much is manual, how much is automated, and where the bottlenecks are.

- Check for gaps in test coverage, defect leakage, or long cycle times.

- Rate your current maturity level: ad-hoc testing, partly automated, or well-structured with CI/CD integration.

- Identify areas where human effort is high but repetitive. These are often the best starting points for AI.

2. Define AI-Ready Use Cases

Not all QA problems need AI. Start where it makes sense.

- Look for tasks where pattern recognition, prediction, and automation matter, like test case generation, defect prediction, or log analysis.

- Prioritize use cases that lower costs or speed up release cycles.

- Keep business objectives in mind, whether reducing time-to-market, cutting defect leakage, or improving user experience.

- Build a roadmap that outlines quick wins along with long-term opportunities.

3. Select the Right Tools and Partners

Technology choices will decide how smooth your adoption is.

- Compare AI-driven QA tools for features like self-healing test scripts, test case recommendations, and defect clustering.

- Check vendor credibility, integration options, and scalability for enterprise environments.

- Decide between open-source platforms, commercial solutions, or a mix of both based on your internal expertise.

- Partner with vendors or consultants who have proven case studies in enterprise-scale AI QA adoption.

4. Build or Train Data Models (or Use Pre-Trained Ones)

AI in QA depends on data. The better the data a model learns from, the better its results.

- Review your historical QA data (past test cases, defect logs, production incidents) to see if it’s usable for training.

- If clean, labeled data is limited, consider using pre-trained models from vendors and adapt them to your environment.

- Train models on your real-world data to capture application-specific patterns.

- Continuously retrain and improve models as your applications and systems evolve.

5. Start with Pilot Projects

Go small before you go big.

- Choose a project with manageable scope but enough complexity to prove AI’s value.

- Assign a focused team that includes both QA experts and data/AI specialists.

- Set clear success metrics, like reduction in test execution time or improved defect detection.

- Document lessons learned to refine the approach before scaling.

6. Measure Impact with KPIs and Metrics

AI in QA has to show real value, not just buzz.

- Track KPIs like defect detection rate, test coverage, cycle time, and overall release quality.

- Compare these metrics before and after AI adoption to prove ROI.

- Include business outcomes, like fewer production incidents or faster time-to-market, in reporting.

- Share results with leadership and teams to build confidence and secure buy-in.
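A before-and-after comparison of two of these KPIs might be computed as follows. The figures are hypothetical, and defect detection rate is taken here to mean the share of all known defects caught before release, which is one common definition rather than the only one.

```python
def defect_detection_rate(found_in_testing, found_in_production):
    """Share of all known defects that testing caught before release."""
    total = found_in_testing + found_in_production
    return found_in_testing / total if total else 0.0


def percent_change(before, after):
    """Relative change from `before` to `after`, as a percentage."""
    return (after - before) / before * 100


# Hypothetical numbers for one release before and one after the pilot.
before = {"caught": 80, "escaped": 20, "cycle_hours": 10.0}
after = {"caught": 90, "escaped": 10, "cycle_hours": 6.0}

ddr_before = defect_detection_rate(before["caught"], before["escaped"])
ddr_after = defect_detection_rate(after["caught"], after["escaped"])
cycle_delta = percent_change(before["cycle_hours"], after["cycle_hours"])

print(f"Defect detection rate: {ddr_before:.0%} -> {ddr_after:.0%}")
print(f"Cycle time change: {cycle_delta:+.1f}%")
```

With these sample numbers the detection rate moves from 80% to 90% and cycle time drops by 40%. A single release pair is a weak basis for an ROI claim, so averaging over several releases on each side gives a fairer comparison.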

7. Scale Across Teams and Pipelines

Once pilots succeed, it’s time to expand.

- Integrate AI-enabled QA into your CI/CD pipelines for continuous testing.

- Standardize frameworks, tools, and processes so teams don’t work in silos.

- Train your QA engineers to combine traditional skills with AI-driven methods.

- Expand use cases step by step, moving from regression testing and defect triage to predictive quality analytics.

✅ Pro Tip: Scaling AI in QA is not just about automation. It’s about building a learning system that improves over time, aligns with your DevOps strategy, and adapts to new applications.

What's Next

AI in Quality Assurance is not just a trend. It’s reshaping how teams deliver reliable software at speed. By blending automation with intelligence, AI enables faster defect detection, predictive insights, and smarter test coverage. However, the real value comes when organizations adopt AI as a partner to human testers, not a replacement.

Ready to Transform Your QA Process with AI?

Explore leading AI testing tools or consult with experts to see how intelligent automation can boost your team’s productivity. Whether you’re just beginning or scaling enterprise-wide, adopting AI-powered QA unlocks faster releases and higher software quality.

Take the next step and start optimizing your QA strategy today!